BoltzGen, One Protein Is All You Need, SynFrag, and GEMS

Kiin Bio's Weekly Insights

Welcome back to your weekly dose of AI news for Life Science!

What’s your biggest time sink in the drug discovery process?

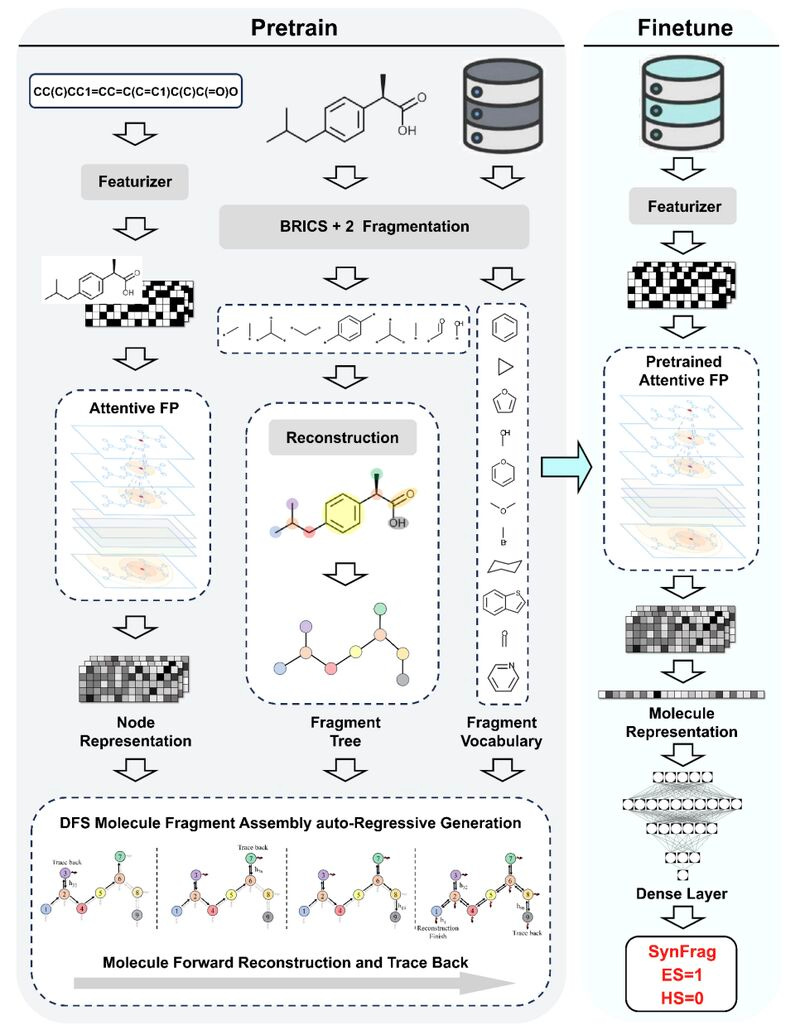

⚛️ BoltzGen: Unifying protein design and structure prediction for universal binder generation

Simulating molecular motion at atomic resolution is powerful but painfully slow.

Classical molecular dynamics offers precision but limited timescales, while coarse-grained models gain speed at the cost of physical detail.

BoltzGen, developed by researchers at EPFL and IBM Research, takes a new approach.

It is a generative model that learns the Boltzmann distribution of 3D molecular configurations directly from simulation data, producing realistic conformations without long MD runs.

Once trained, BoltzGen can generate equilibrium molecular structures that reflect both local flexibility and global diversity, while preserving the physical symmetries of real systems.
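For readers who want the formula behind the prose, the Boltzmann distribution assigns each configuration $\mathbf{x}$ a probability that decays exponentially with its potential energy $U(\mathbf{x})$ at temperature $T$. This is standard statistical mechanics, not a detail taken from the BoltzGen paper:

$$
p(\mathbf{x}) = \frac{1}{Z}\exp\!\left(-\frac{U(\mathbf{x})}{k_B T}\right),
\qquad
Z = \int \exp\!\left(-\frac{U(\mathbf{x})}{k_B T}\right)\mathrm{d}\mathbf{x}
$$

Here $k_B$ is Boltzmann's constant. The normalising constant $Z$ is intractable for realistic molecules, which is why a model that draws samples from (an approximation of) $p(\mathbf{x})$ directly, rather than integrating equations of motion, is attractive.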

🔬 Applications and Insights

1️⃣ Learning from molecular data

BoltzGen approximates the Boltzmann distribution of molecular structures using deep generative modelling, capturing how atoms or coarse-grained beads arrange in space without solving the equations of motion.

2️⃣ Physics-preserving representation

The network encodes rotational, translational, and permutational invariance, ensuring that sampled structures obey molecular symmetries.

3️⃣ Fast equilibrium sampling

Once trained, BoltzGen generates statistically valid configurations orders of magnitude faster than molecular dynamics, offering quick access to conformational ensembles.

4️⃣ Generalisation across systems

Tested on peptides, short polymers, and small organic molecules, the model reproduces realistic coarse-grained distributions and generalises across related systems.
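To make "rotational, translational, and permutational invariance" concrete, here is a toy featurisation that ignores all three: the sorted list of pairwise distances. This is an illustration in plain Python under that simplifying assumption, not BoltzGen's network; real symmetry-aware architectures are considerably richer.

```python
import math
import random

def sorted_pair_distances(coords):
    """All pairwise distances between points, sorted in ascending order.

    Distances do not change under rotation or translation, and sorting
    removes any dependence on the order in which particles are listed.
    """
    dists = [
        math.dist(coords[i], coords[j])
        for i in range(len(coords))
        for j in range(i + 1, len(coords))
    ]
    return sorted(dists)

def rotate_z(coords, angle):
    """Rotate 3D points about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in coords]

def translate(coords, shift):
    """Shift every point by the same vector."""
    return [tuple(a + b for a, b in zip(p, shift)) for p in coords]

def features_match(a, b, tol=1e-9):
    """True if two feature lists agree element-wise within `tol`."""
    return len(a) == len(b) and all(abs(x - y) < tol for x, y in zip(a, b))

random.seed(0)
# A toy "configuration": five particles with random 3D coordinates.
conf = [tuple(random.uniform(-1.0, 1.0) for _ in range(3)) for _ in range(5)]

# Rotate, translate, then shuffle the particle order.
moved = translate(rotate_z(conf, 0.7), (2.0, -1.0, 0.5))
shuffled = moved[:]
random.shuffle(shuffled)

print(features_match(sorted_pair_distances(conf), sorted_pair_distances(shuffled)))  # True
```

One design caveat: sorted distances are also blind to mirror images, so a descriptor like this cannot tell enantiomers apart; models of chiral molecules need features that preserve handedness.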

💡 Why It’s Cool

BoltzGen brings deep learning into the realm of statistical mechanics.

Instead of simulating trajectories frame by frame, it learns the distribution itself, which reflects the energy landscape that governs how molecules fold and fluctuate.

It produces physically meaningful molecular structures at a fraction of the computational cost, bridging the gap between brute-force simulation and generative physics.

A faster, data-native way to explore molecular conformations and energy landscapes.

📄 Check out the paper!

⚙️ Try out the code.

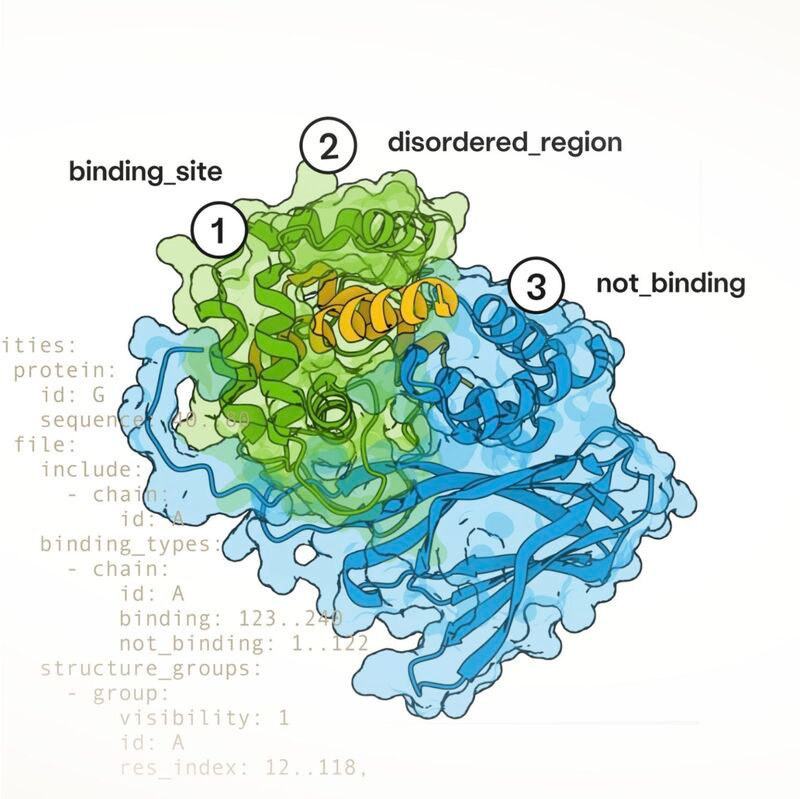

💥 CleanSplit + GEMS: Cleaning up AI binding prediction.

Most models that claim to predict how tightly a molecule binds a protein don’t actually learn chemistry. They learn the data.

A team from ETH Zurich, the University of Bern, and Yale found that the field’s standard benchmark setup, training on PDBbind and testing on CASF, is deeply biased.

Nearly half of the test complexes closely resemble complexes in the training data, meaning most models have been graded on examples very similar to ones they’ve already seen.

To fix it, they built PDBbind CleanSplit, a filtered dataset that removes redundant proteins and ligands. When leading models like Pafnucy and GenScore were retrained on CleanSplit, their performance dropped by as much as 40%, exposing how much “accuracy” was really memorisation.
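The core idea of a leakage filter fits in a few lines: drop any training complex whose ligand or protein is too similar to something in the test set. This is a simplified sketch, not the published CleanSplit algorithm, which uses its own similarity criteria and thresholds. Here ligands are hypothetical fingerprint bit sets compared with Tanimoto similarity, protein similarity is crudely approximated by shared 3-residue substrings, and both cutoffs are arbitrary.

```python
def tanimoto(a, b):
    """Tanimoto similarity of two sets: size of intersection / size of union."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0

def kmers(seq, k=3):
    """Set of length-k substrings; a crude, hypothetical stand-in for protein similarity."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}

def is_leaky(train_cx, test_set, ligand_cutoff, protein_cutoff):
    """True if a training complex is too similar to any test complex."""
    for test_cx in test_set:
        if tanimoto(train_cx["ligand"], test_cx["ligand"]) >= ligand_cutoff:
            return True
        if tanimoto(kmers(train_cx["protein"]), kmers(test_cx["protein"])) >= protein_cutoff:
            return True
    return False

def clean_split(train, test, ligand_cutoff=0.8, protein_cutoff=0.8):
    """Keep only training complexes that pass the (hypothetical) similarity filter."""
    return [cx for cx in train if not is_leaky(cx, test, ligand_cutoff, protein_cutoff)]

# Hypothetical complexes: protein sequence plus ligand fingerprint bits.
test = [{"id": "s1", "protein": "MKTAYIAKQR", "ligand": {1, 2, 3, 5}}]
train = [
    {"id": "t1", "protein": "MKTAYIAKQR", "ligand": {1, 2, 3, 4}},  # same protein
    {"id": "t2", "protein": "GSHMLEDPVV", "ligand": {10, 11, 12}},  # unrelated
    {"id": "t3", "protein": "PLQRSTWYVN", "ligand": {1, 2, 3, 5}},  # same ligand
]

print([cx["id"] for cx in clean_split(train, test)])  # ['t2']
```

Filtering the training set, rather than the test set, keeps the benchmark itself fixed so that results stay comparable with earlier work.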

Then they built something new. GEMS, the Graph neural network for Efficient Molecular Scoring, predicts protein-ligand binding by learning actual interactions rather than dataset patterns.

🔬 Applications and Insights

1️⃣ Clean data, real generalisation

CleanSplit eliminates structural and ligand redundancy between training and test sets, producing the first truly independent benchmark for binding prediction.

2️⃣ Revealing benchmark bias

Retraining leading models on CleanSplit caused dramatic drops in R² scores (up to 0.4), showing how much data leakage had inflated reported accuracy across the field.

3️⃣ Learning interactions, not memorising structures

GEMS depends on protein-ligand context. When protein nodes are removed from its input graph, its performance collapses: a clear sign it is modelling interactions rather than recalling ligands.

4️⃣ Fast, robust, and transferable

By combining embeddings from ESM2, Ankh, and ChemBERTa, GEMS learns generalisable features across targets. It trains 25× faster than Pafnucy and 100× faster than GenScore.
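For readers less familiar with the metric quoted above, R² (the coefficient of determination) compares a model's squared errors with those of a baseline that always predicts the mean affinity. A minimal sketch; the affinities and predictions below are invented toy values, not data from the paper:

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_tot = sum((y - mean) ** 2 for y in y_true)               # error of a mean-only baseline
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))  # error of the model
    return 1.0 - ss_res / ss_tot

# Toy binding affinities (e.g. pK values) and two sets of made-up predictions.
y_true = [4.0, 5.0, 6.0, 7.0, 8.0]
close_fit = [4.2, 4.9, 6.1, 6.8, 8.0]
mean_only = [6.0] * 5

print(round(r_squared(y_true, close_fit), 3))  # 0.99
print(r_squared(y_true, mean_only))            # 0.0
```

R² is 1 for perfect predictions, 0 for the mean-only baseline, and negative for a model that does worse than that baseline.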

💡 Why It’s Cool

This study resets the benchmark for AI in drug design. It shows that most binding predictors aren’t broken; they were trained and evaluated on biased data.

By fixing the data, the team built a model that genuinely learns protein-ligand interactions. GEMS delivers accuracy, speed, and transparency: the foundations real progress depends on.

📄 Check out the paper!

⚙️ Try out the code.

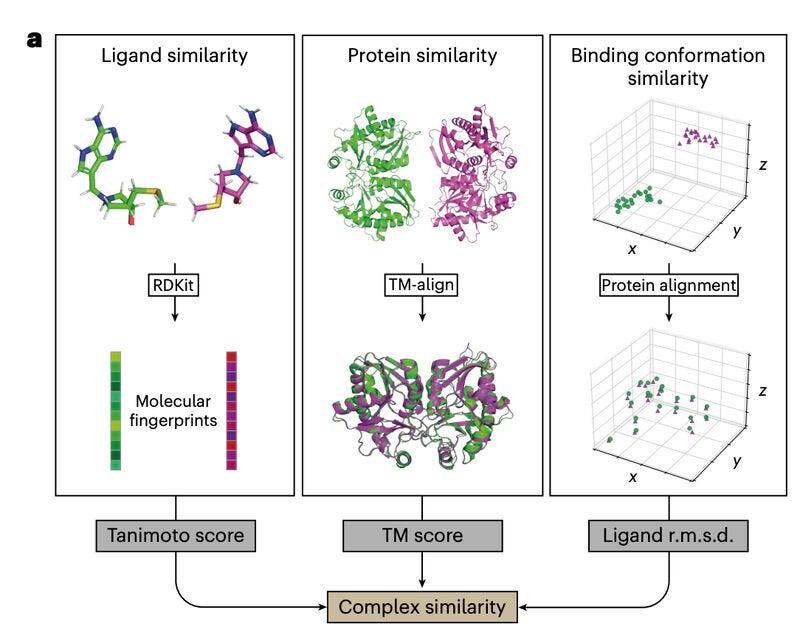

🧬 One Protein Is All You Need: Teaching protein language models to specialise at test time

Protein language models usually rely on huge datasets.

Millions of sequences, countless alignments, and evolutionary histories are used to teach AI what makes proteins work.

What if all that information could come from a single protein?

Researchers at CTU and Seoul National University, in collaboration with MIT, have shown it can. Their new framework, One Protein Is All You Need (OPIA), trains protein language models directly on the mutational landscape of a single protein, learning structure, function, and fitness without sequence databases or alignments.

By training on deep mutational scanning (DMS) data, OPIA predicts how amino acid substitutions affect protein stability and activity, even for unseen variants. All of this comes from the experimental data of one protein.
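DMS datasets usually label each variant by its substitutions, written as wild-type residue, 1-based position, and mutant residue (for example `K2R`). Turning those labels into full variant sequences is a typical preprocessing step before a language model can score them. The sketch below is a generic illustration, not OPIA's code, and assumes colon-separated labels for multi-mutants; the sequence is hypothetical.

```python
import re

# Wild-type residue, 1-based position, mutant residue, e.g. "K2R".
MUTATION = re.compile(r"^([A-Z])(\d+)([A-Z])$")

def apply_mutations(wild_type, mutant):
    """Apply substitutions like 'K2R' or 'K2R:A4G' to a wild-type sequence."""
    seq = list(wild_type)
    for token in mutant.split(":"):
        match = MUTATION.match(token)
        if match is None:
            raise ValueError(f"unrecognised mutation: {token!r}")
        wt, pos, mt = match.group(1), int(match.group(2)), match.group(3)
        if not 1 <= pos <= len(seq):
            raise ValueError(f"position {pos} is outside the sequence")
        if seq[pos - 1] != wt:  # guard against labels that don't match the sequence
            raise ValueError(f"expected {wt} at position {pos}, found {seq[pos - 1]}")
        seq[pos - 1] = mt
    return "".join(seq)

print(apply_mutations("MKTAYIAK", "K2R:A4G"))  # MRTGYIAK
```

Checking the stated wild-type residue catches off-by-one numbering, a common source of silent errors when DMS tables and reference sequences come from different sources.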

🔬 Applications and Insights

1️⃣ Single-protein learning

Instead of millions of sequences, OPIA learns from one. It captures the local rules of folding, binding, and activity directly from measured mutations.

2️⃣ Accurate fitness prediction

On 10 benchmark proteins, OPIA matches or exceeds large-scale protein models, predicting mutational fitness and stability with strong correlation to experimental measurements.

3️⃣ Generalisation from local context

The model learns residue dependencies that mirror real structural and functional coupling, allowing it to predict unseen mutations accurately.

4️⃣ Data-efficient biology

OPIA shows that deep learning can uncover functional rules from single-protein experimental data, making it possible to model protein behaviour directly from lab measurements.
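The write-up mentions "strong correlation to experiment" without naming the metric. Fitness-prediction benchmarks commonly use Spearman rank correlation, which only asks whether a model orders variants correctly, not whether its scores share the experimental units. A minimal sketch, assuming that metric and using toy numbers:

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

measured = [0.1, 0.5, 0.3, 0.9]     # toy experimental fitness values
predicted = [-2.0, 1.0, 0.0, 3.0]   # toy model scores on a different scale
print(round(spearman(measured, predicted), 6))  # 1.0
```

The predictions above are on a completely different scale from the measurements, yet the rank correlation is perfect because the ordering matches.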

💡 Why It’s Cool

This study reverses the usual logic of protein modelling.

Instead of training bigger models on massive databases, it learns deeply from one molecule, discovering how it works through data generated in the lab.

It is a glimpse of what lab-native AI could look like, where models learn directly from experimental feedback rather than from millions of internet sequences.

Sometimes, one protein really is all you need.

📄 Check out the paper!

⚙️ Try out the code.

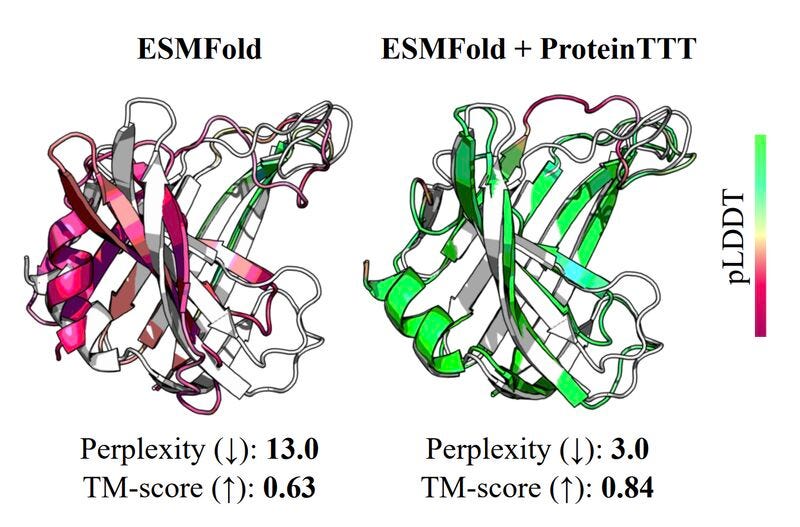

🧪 SynFrag: Bridging AI Molecule Design and Real-World Synthesis

AI can now generate molecules faster than chemists can make them.

But most of those digital compounds can’t actually be synthesised. This “generation-synthesis gap” is one of the biggest hurdles in AI-driven drug design.

Researchers at the Shanghai Institute of Materia Medica and Nanjing University of Chinese Medicine developed SynFrag, a deep learning model that predicts whether a molecule can be made and how hard it will be.

Instead of relying on fragment frequency or reaction templates, SynFrag learns how chemists think. It’s trained to assemble molecules piece by piece, learning realistic construction patterns from 9.18 million unlabelled molecules. This lets it spot subtle “synthesis difficulty cliffs,” where a tiny change makes a molecule far harder to make.
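By analogy with activity cliffs in medicinal chemistry, a "synthesis difficulty cliff" can be framed as a pair of structurally similar molecules whose synthesisability scores differ sharply. The sketch below flags such pairs from any model's scores. It is not SynFrag's procedure: the fingerprints, scores, molecule names, and both thresholds are hypothetical.

```python
from itertools import combinations

def tanimoto(a, b):
    """Tanimoto similarity of two fingerprint bit sets."""
    union = len(a | b)
    return len(a & b) / union if union else 1.0

def find_synthesis_cliffs(molecules, sim_cutoff=0.7, score_gap=0.5):
    """Return (name_a, name_b, similarity, score_gap) for similar pairs with very different scores.

    `molecules` maps a name to (fingerprint_bits, score), where score is a
    synthesisability probability in [0, 1] from some model (hypothetical here).
    """
    cliffs = []
    for (name_a, (fp_a, s_a)), (name_b, (fp_b, s_b)) in combinations(molecules.items(), 2):
        sim = tanimoto(fp_a, fp_b)
        if sim >= sim_cutoff and abs(s_a - s_b) >= score_gap:
            cliffs.append((name_a, name_b, round(sim, 2), round(abs(s_a - s_b), 2)))
    return cliffs

# Toy fingerprints and scores, invented for illustration.
mols = {
    "parent":     ({1, 2, 3, 4, 5, 6, 7, 8, 9, 10}, 0.92),
    "extra_ring": ({1, 2, 3, 4, 5, 6, 7, 8, 9, 11}, 0.21),
    "unrelated":  ({20, 21, 22, 23}, 0.15),
}
print(find_synthesis_cliffs(mols))  # [('parent', 'extra_ring', 0.82, 0.71)]
```

The pairwise loop is quadratic in the number of molecules, which is fine for a lead series but would need nearest-neighbour indexing for large libraries.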

🔬 Applications and Insights

1️⃣ Fast, interpretable predictions

SynFrag produces sub-second synthetic accessibility scores, over 10,000× faster than computer-aided synthesis planning (CASP) tools, while highlighting likely reactive or challenging sites.

2️⃣ Generalises across real and AI-generated compounds

SynFrag achieved an AUROC of 0.945 on clinical drugs and 0.889 on AI-designed molecules, outperforming existing models including DeepSA and BR-SAscore.

3️⃣ Detects “synthesis cliffs”

SynFrag identifies cases where small structural tweaks, such as an added ring or functional group, make a design unsynthesisable, helping chemists prioritise realistic analogues during lead optimisation.

4️⃣ Visual reasoning at scale

The open-access SynFrag web platform (synfrag.simm.ac.cn) supports up to 250,000 molecules per batch, visualising atom-level attention weights and integrating with AiZynthFinder or SYNTHIA for retrosynthetic planning.
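The AUROC figures above have a simple reading: the probability that a randomly chosen positive example scores higher than a randomly chosen negative one, with ties counted as half. A minimal pairwise sketch, assuming that label 1 marks the positive class (which class SynFrag treats as positive is an assumption here) and using toy scores:

```python
def auroc(scores, labels):
    """Area under the ROC curve via pairwise comparison (Mann-Whitney U).

    labels: 1 for the positive class, 0 for the negative class.
    Ties between a positive and a negative count as half a correct ordering.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

scores = [0.95, 0.80, 0.20, 0.70, 0.30, 0.10]  # toy model scores
labels = [1, 1, 1, 0, 0, 0]                    # toy ground-truth classes
print(round(auroc(scores, labels), 3))  # 0.778
```

This double loop is quadratic; for batches on the scale of 250,000 molecules, an equivalent rank-based formula computed after a single sort is the practical choice.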

💡 Why It’s Cool

SynFrag captures something closer to human synthesis logic than any previous model.

It doesn’t just count fragments; it learns how molecules come together, step by step, and uses that knowledge to judge what’s actually possible.

By uniting interpretability, scale, and chemical realism, SynFrag helps close the gap between AI molecule generation and lab synthesis, moving computational design a step closer to the bench.

📄 Check out the paper!

⚙️ Try out the code.

Thanks for reading!

Did you find this newsletter insightful? Share it with a colleague!

Subscribe Now to stay at the forefront of AI in Life Science.

Connect With Us

Have questions or suggestions? We'd love to hear from you!

📧 Email Us | 📲 Follow on LinkedIn | 🌐 Visit Our Website