CeMM’s CELLFIE, WEHI’s ProteinDJ, and MPI + Helmholtz’s scPortrait

Kiin Bio's Weekly Insights

Welcome back to your weekly dose of AI news for Life Science!


CELLFIE: High-content CRISPR screening platform to optimise CAR T cell therapies

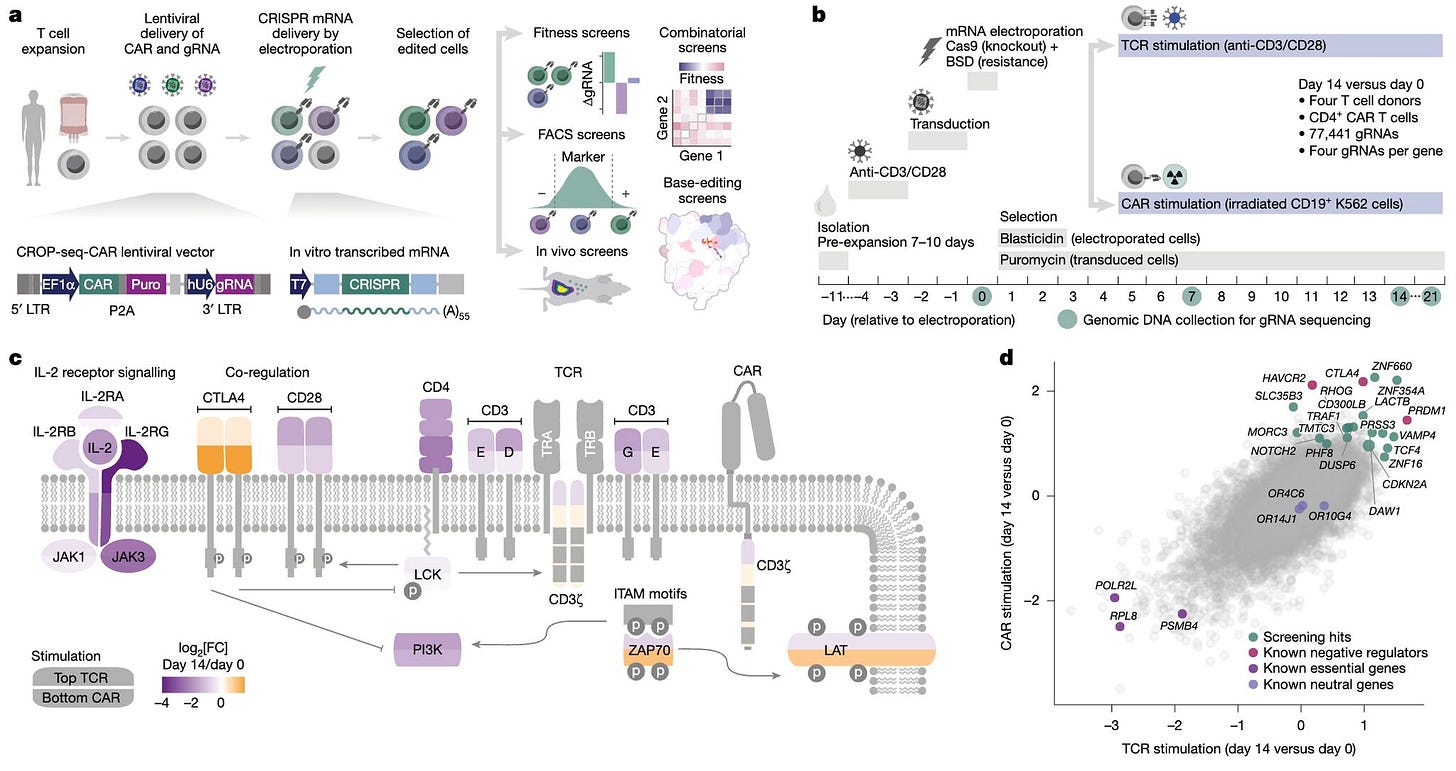

A new study from Arc Institute and CeMM introduces CELLFIE, a CRISPR screening platform that enables systematic, large-scale engineering of human primary CAR T cells. By combining efficient mRNA-based CRISPR delivery with pooled gRNA libraries, the platform makes it possible to interrogate T cell function under clinically relevant conditions.

Large-scale functional genomics has mostly been limited to immortalised cell lines. Primary human T cells, the foundation of CAR T therapy, have been much harder to engineer at scale. CELLFIE closes that gap, supporting knockout, activation, and base-editing screens directly in CAR T cells.

Using this approach, the team performed 58 pooled CRISPR screens across diverse contexts, uncovering both known immune checkpoints and novel regulators of persistence, survival, and exhaustion.

Applications and Insights

1. Genome-wide scale in primary T cells

CELLFIE enabled pooled perturbations across 58 screens, spanning activation, apoptosis, fratricide, and checkpoint pathways.

2. Synergistic perturbations

Combinatorial editing revealed that dual knockouts like RHOG + FAS boosted tumour control beyond single edits in mouse models.

3. High-resolution base editing

A tiling library of 3,755 gRNAs mapped critical residues in RHOG, pointing to specific sites for precise base-editing.

4. Lower cost, higher throughput

Cas9 mRNA production reduced editing costs by ~10×, making hundreds of large-scale screens feasible.

What about the training data?

CELLFIE is a platform rather than a pretrained model, so its “data” are functional outputs from pooled CRISPR perturbations:

Knockout screens: genome-wide gRNA libraries delivered to CAR T cells from multiple human donors.

Combinatorial screens: 238 dual-gRNA pairs tested across CAR designs with varied costimulatory domains.

Base-editing screens: 3,755 gRNAs tiling RHOG and PAC, combined with ABE and CBE editors.

All raw and processed outputs are available in GEO and Supplementary Tables, including in vivo tumour control datasets.
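For anyone planning to dig into screen outputs like these, here is a minimal sketch of a common first pass on pooled CRISPR data: depth-normalise per-gRNA read counts, take a log2 fold change between two conditions, and summarise per gene. This is a generic illustration, not the CELLFIE analysis pipeline. The guide names, gene names, and counts are all made up, and the median summary is just one simple choice.

```python
import math
from collections import defaultdict
from statistics import median


def log2_fold_changes(before, after, pseudocount=1.0):
    """Per-gRNA log2 fold change between two count tables, after depth normalisation."""
    total_before = sum(before.values())
    total_after = sum(after.values())
    lfc = {}
    for guide, count_before in before.items():
        # Counts per million, so a deeper-sequenced sample does not look like enrichment.
        cpm_before = count_before / total_before * 1e6
        cpm_after = after.get(guide, 0) / total_after * 1e6
        lfc[guide] = math.log2((cpm_after + pseudocount) / (cpm_before + pseudocount))
    return lfc


def gene_scores(lfc, guide_to_gene):
    """Summarise guide-level fold changes as the median per gene."""
    per_gene = defaultdict(list)
    for guide, value in lfc.items():
        per_gene[guide_to_gene[guide]].append(value)
    return {gene: median(values) for gene, values in per_gene.items()}


# Toy counts (hypothetical): GENE_A guides enrich after selection, GENE_B guides deplete.
before = {"GENE_A_g1": 100, "GENE_A_g2": 120, "GENE_B_g1": 110, "GENE_B_g2": 90}
after = {"GENE_A_g1": 400, "GENE_A_g2": 380, "GENE_B_g1": 60, "GENE_B_g2": 40}
guide_to_gene = {guide: guide.rsplit("_", 1)[0] for guide in before}

scores = gene_scores(log2_fold_changes(before, after), guide_to_gene)
for gene, score in sorted(scores.items()):
    print(f"{gene}: median log2FC = {score:+.2f}")
```

A positive score means guides against that gene became more abundant under selection. Real analyses usually add replicates, control guides, and a statistical test on top of this.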

I thought this was cool because it brings systematic discovery directly into primary human cell therapies. Instead of piecemeal testing, researchers can now map pathways and perturbations at genome-wide scale in the same T cells used for therapy. That makes CELLFIE not just a screening tool, but a foundation for rational CAR T engineering.

📄Check out the paper!

⚙️Data and methods are fully open-source for academic use and licensable for translation.

scPortrait: Standardised single-cell imaging framework for multimodal integration

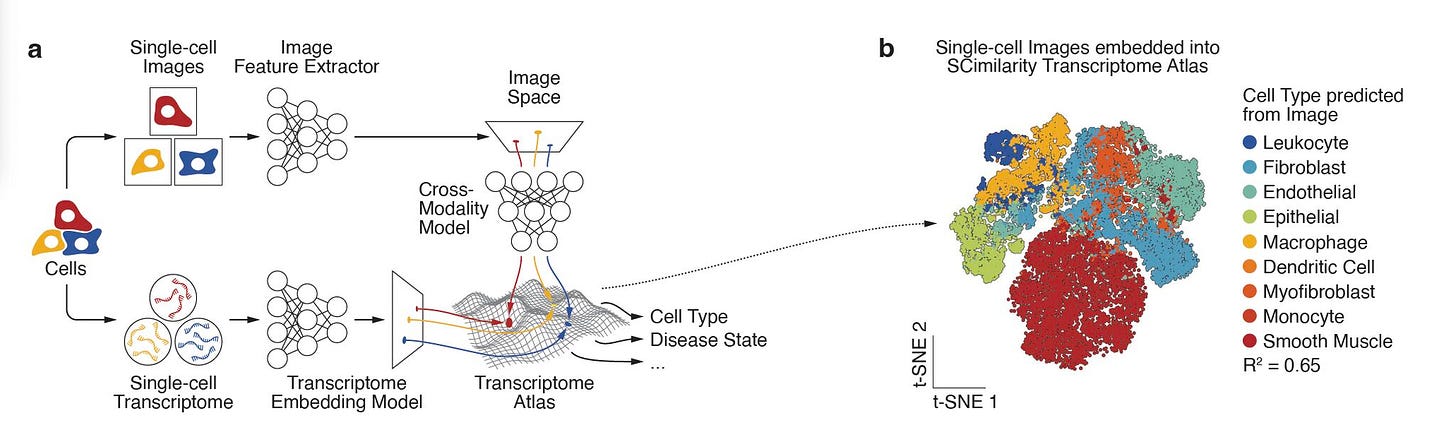

The Max Planck Institute of Biochemistry and Helmholtz Munich introduce scPortrait, a software package and file format that transforms raw microscopy into standardised single-cell image datasets.

Single-cell images have always been rich with information, but unfortunately they lacked the kind of standardisation that transcriptomics and proteomics enjoy. That made it hard to integrate images into large-scale modelling or multimodal foundation models. scPortrait fixes that. It reads, stitches, and segments raw fields of view, extracts individual cells, and saves them into a new .h5sc format designed for fast access and seamless integration with scverse tools.

The result: images become a reusable, machine learning–ready modality, on par with sequencing data. Exciting.

Applications and Insights

1. High-throughput extraction

scPortrait processes raw microscopy at scale with CPU/GPU parallelisation, extracting 700+ cells per second into single-cell datasets.

2. Standardised, FAIR format

The .h5sc format builds on HDF5/AnnData, enabling reproducible, shareable image datasets with fast random access, which is critical for training modern ML models.

3. Cross-modality integration

In tonsil tissue, scPortrait mapped CODEX imaging data onto CITE-seq gene expression using optimal transport and flow matching, recovering missing markers like TCL1A.

4. Revealing hidden cell states

Applied to ovarian cancer tissue, image embeddings identified macrophage subpopulations with distinct spatial niches and transcriptomic signatures, showing morphology alone can uncover meaningful heterogeneity.
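To make the cross-modality idea in point 3 more concrete, here is a small sketch of entropic optimal transport (the Sinkhorn algorithm) used to carry a marker from sequenced cells over to imaged cells. It is a toy in pure Python, not scPortrait's implementation, and it leaves out flow matching entirely. The one-dimensional "shared feature", the marker values, and the function names are all assumptions for illustration.

```python
import math


def sinkhorn_plan(cost, a, b, eps=0.1, n_iter=200):
    """Entropic OT plan between histograms a (rows) and b (columns)."""
    n, m = len(a), len(b)
    kernel = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iter):
        # Alternately rescale rows and columns to match the target marginals.
        u = [a[i] / sum(kernel[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]


def transfer_marker(plan, marker_values):
    """Impute a marker for each row cell as the plan-weighted mean over column cells."""
    imputed = []
    for row in plan:
        mass = sum(row)
        imputed.append(sum(p * z for p, z in zip(row, marker_values)) / mass)
    return imputed


# Hypothetical toy data: one feature measured in both modalities, one marker
# measured only in the sequenced cells.
imaged_shared = [0.0, 0.1, 1.0, 0.9]
sequenced_shared = [0.05, 0.95]
sequenced_marker = [0.0, 5.0]

cost = [[(x - y) ** 2 for y in sequenced_shared] for x in imaged_shared]
plan = sinkhorn_plan(cost, a=[0.25] * 4, b=[0.5] * 2)
imputed = transfer_marker(plan, sequenced_marker)
print([round(value, 3) for value in imputed])
```

Imaged cells that look like the low-marker sequenced cell get a value near 0, and those that look like the high-marker cell get a value near 5. Real data would use many shared features and a numerical library rather than nested lists.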

What about the training data?

scPortrait is not a pretrained model but a data infrastructure tool, so its “training data” are the image datasets it processes and standardises. In the paper, the team demonstrated it on several large-scale microscopy experiments:

CODEX imaging of human tonsil tissue, generating single-cell portraits that were aligned to CITE-seq transcriptomes.

Ovarian cancer tissue sections, where image embeddings revealed distinct macrophage states with different spatial niches.

Large-scale microscopy benchmarks, with scPortrait extracting more than 700 single cells per second and producing tens of thousands of single-cell images per dataset.

I thought this was cool because it finally makes images a first-class citizen in systems biology. Instead of just being downstream illustrations, single-cell images can now sit alongside sequencing and proteomics as inputs for multimodal learning.

So for labs building foundation models or studying tissue organisation, this prioritisation of imaging is the kind of infrastructure shift that could accelerate the whole field (hopefully). It’s no wonder the tool is getting the attention it has!

📄Check out the paper!

⚙️Try out the code.

ProteinDJ: Modular HPC pipeline for scalable protein binder design

The Walter and Eliza Hall Institute of Medical Research and the University of Melbourne introduce ProteinDJ, a modular pipeline that makes protein binder design efficient on high-performance computing (HPC) systems.

Designing functional binders with AI tools like RFdiffusion, ProteinMPNN, and AlphaFold often takes thousands of designs and hundreds of GPU hours. That makes the process expensive and hard to scale. ProteinDJ tackles this by containerising the entire workflow with Nextflow and Apptainer, parallelising it across CPUs and GPUs, and adding smart filtering at each step. The result is a system that can generate and validate hundreds of designs per hour, turning HPC clusters into binder discovery engines.
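Why does filtering at each step matter so much? A rough sketch, assuming three stages ordered from cheapest to most expensive: every design dropped early is one the later, costlier stages never have to score. The stage names, thresholds, and random placeholder scores below are hypothetical stand-ins, not ProteinDJ's actual metrics or code.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Design:
    design_id: int
    metrics: dict = field(default_factory=dict)


def run_stage(designs, metric, score_fn, threshold):
    """Score each design, record the metric, and keep those at or above threshold."""
    survivors = []
    for design in designs:
        design.metrics[metric] = score_fn(design)
        if design.metrics[metric] >= threshold:
            survivors.append(design)
    return survivors


rng = random.Random(0)  # seeded so the toy run is repeatable


def placeholder_score(_design):
    # Stand-in for a real tool call; returns a random score in [0, 1).
    return rng.random()


# Hypothetical stage names and thresholds, ordered from cheapest to most expensive.
stages = [
    ("backbone_quality", 0.3),
    ("sequence_score", 0.5),
    ("predicted_confidence", 0.7),
]

n_designs = 1000
pool = [Design(i) for i in range(n_designs)]
evaluations = 0
for metric, threshold in stages:
    evaluations += len(pool)  # only survivors reach the next stage
    pool = run_stage(pool, metric, placeholder_score, threshold)

print(f"{len(pool)} designs passed all filters after {evaluations} evaluations "
      f"({len(stages) * n_designs} without early filtering)")
```

In a real campaign the savings are larger than the raw evaluation count suggests, because the last stage (structure prediction) tends to be the most GPU-hungry.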

The pipeline is modular, letting researchers swap in different sequence design and structure prediction tools. It also includes Bindsweeper, a parameter sweep tool that systematically tests design settings, helping to optimise workflows before launching big campaigns.
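A parameter sweep of this kind boils down to expanding a grid of settings and scoring each combination. The sketch below shows that idea with `itertools.product`. It is not Bindsweeper's interface: the parameter names, values, and the toy scoring function are invented for illustration, and in practice each combination would launch a small pipeline run.

```python
from itertools import product


def expand_grid(grid):
    """Turn {name: [values]} into a list of dicts, one per combination."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*(grid[name] for name in names))]


def best_setting(grid, evaluate):
    """Return the combination with the highest score under evaluate."""
    return max(expand_grid(grid), key=evaluate)


# Hypothetical parameters and values.
grid = {
    "sampling_temperature": [0.1, 0.2, 0.3],
    "sequences_per_backbone": [1, 4, 8],
}


def toy_success_rate(setting):
    # Made-up response surface standing in for a measured in silico success rate.
    temperature_penalty = (setting["sampling_temperature"] - 0.2) ** 2
    return 0.01 * setting["sequences_per_backbone"] - temperature_penalty


settings = expand_grid(grid)
best = best_setting(grid, toy_success_rate)
print(f"{len(settings)} combinations tested, best: {best}")
```

The grid grows multiplicatively with each added parameter, which is why running a small sweep before a big campaign is worth the compute.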

Applications and Insights

1. Near-linear HPC scaling

On 8 NVIDIA A30 GPUs, ProteinDJ designed and evaluated 4,000 sequences in under 2.5 hours, compared with almost 17 hours on a single GPU. That is a roughly 7× speedup at 86.5% parallel efficiency.

2. Flexible and modular

Users can choose between ProteinMPNN and Full-Atom MPNN for sequence design, and between AlphaFold2 and Boltz-2 for validation. This modularity makes it future-proof as new tools arrive.

3. Higher in silico success rates

Benchmarking showed that combining ProteinMPNN with FastRelax improved binder success rates across multiple targets, reaching up to 52.3% for PD-L1.

4. Smarter exploration

With Bindsweeper, users can run multi-parameter sweeps to identify the best design conditions, saving compute cycles and increasing hit rates before wet-lab validation.
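For the scaling numbers in point 1, speedup is the single-GPU time divided by the multi-GPU time, and parallel efficiency is that speedup divided by the number of GPUs. The quick check below uses the rounded run times quoted above (17 h and 2.5 h), so it lands near the reported 86.5% rather than exactly on it. The function names are my own.

```python
def speedup(t_single, t_parallel):
    """How many times faster the parallel run finished."""
    return t_single / t_parallel


def parallel_efficiency(t_single, t_parallel, n_workers):
    """Fraction of ideal linear scaling achieved (1.0 means perfect)."""
    return speedup(t_single, t_parallel) / n_workers


n_gpus = 8
observed_speedup = speedup(17.0, 2.5)
observed_efficiency = parallel_efficiency(17.0, 2.5, n_gpus)

# Working backwards from the reported efficiency gives the implied speedup.
implied_speedup = 0.865 * n_gpus

print(f"speedup from rounded times: {observed_speedup:.2f}x")
print(f"efficiency from rounded times: {observed_efficiency:.1%}")
print(f"speedup implied by 86.5% efficiency: {implied_speedup:.2f}x")
```

Both routes give a speedup just under 7×. The small gap comes from the run times being quoted as "almost" and "under" rather than exact values.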

What about the training data?

ProteinDJ is not a new pretrained model, but a workflow framework that orchestrates existing design tools. Its “training data” therefore comes from the underlying models it runs:

RFdiffusion and Boltz-2 were trained on curated protein structures from the Protein Data Bank (PDB).

ProteinMPNN and Full-Atom MPNN were trained on backbone-sequence pairs from high-quality crystal structures.

AlphaFold2 was trained on sequence-structure data from UniProt and the PDB.

ProteinDJ itself doesn’t require retraining. It wraps these pretrained models into a reproducible, containerised pipeline, enabling scalable runs on HPC. Benchmarking in the paper was done on public protein-target datasets like PD-L1, IL-7Rα, and InsulinR.

I thought this was cool because it takes the binder design workflows we already know (RFdiffusion, ProteinMPNN, AlphaFold) and makes them usable at scale. Instead of researchers fighting with installs or bottlenecking a workstation, ProteinDJ turns HPC systems into efficient, modular, and user-friendly protein design pipelines. For groups aiming to run large binder campaigns or compare design strategies, it’s a seriously practical step forward.

📄Check out the paper!

⚙️Try out the code.

Thanks for reading!
